Feb 19, 2026

NewDecoded

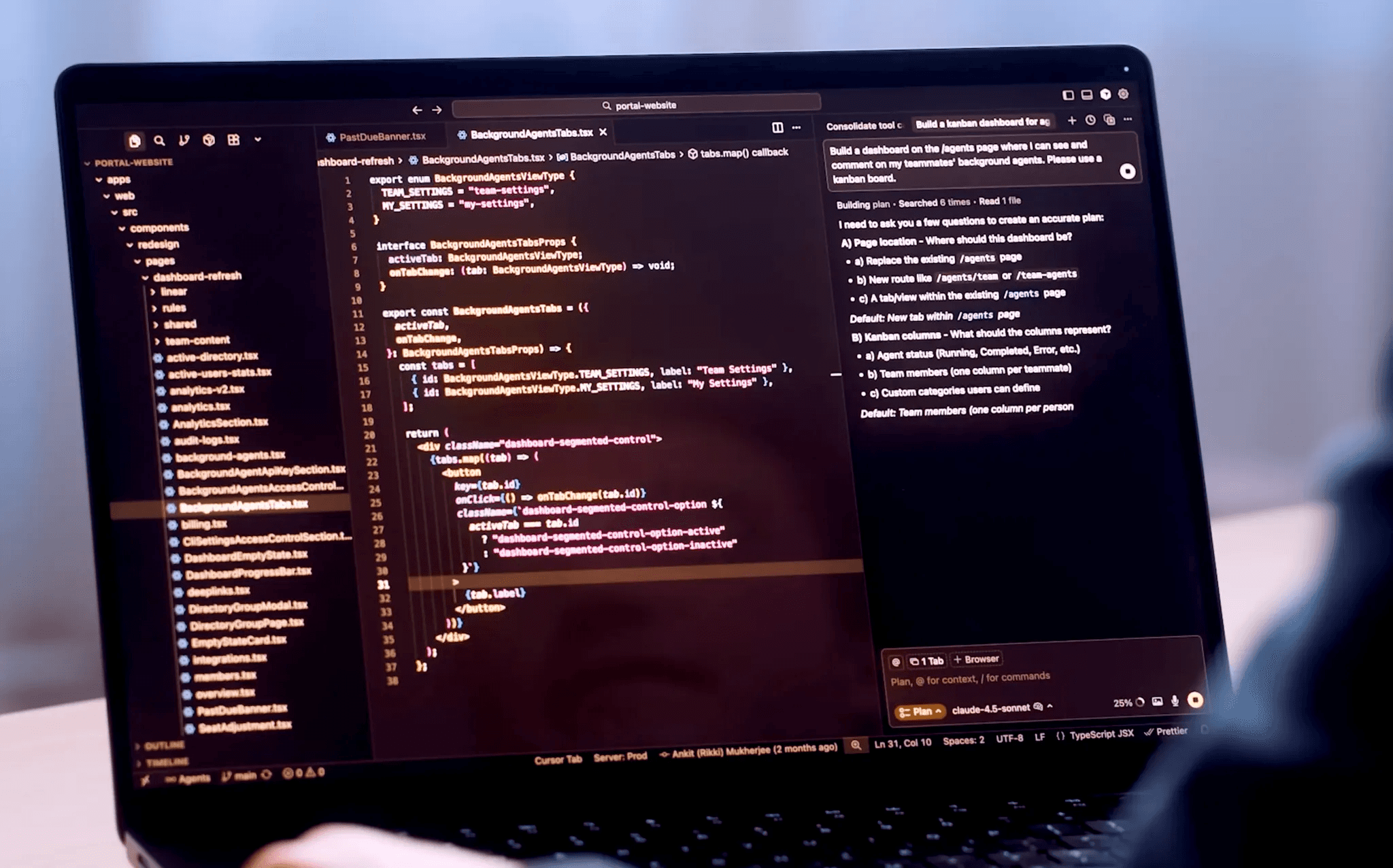

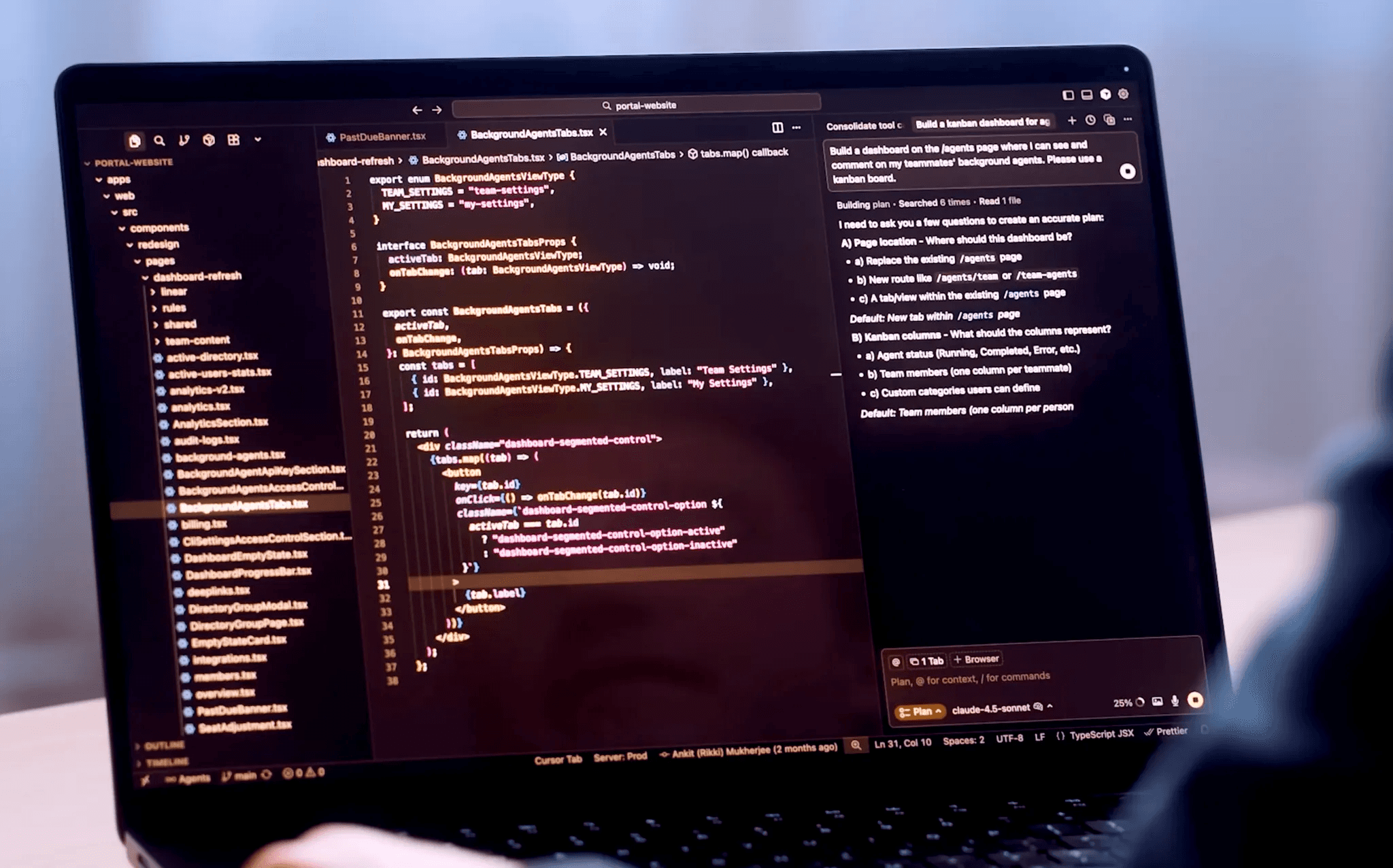

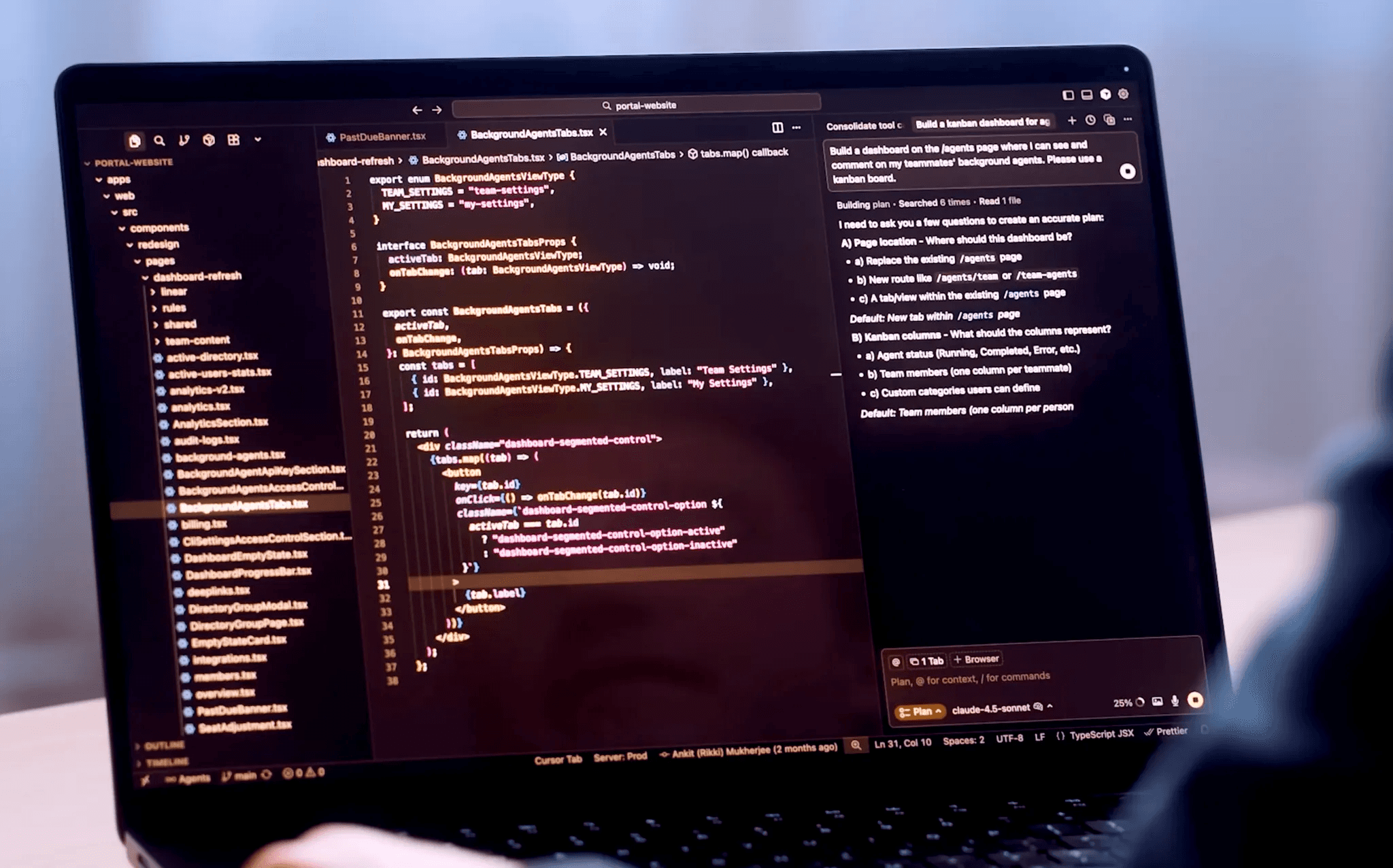

Image from Cursor’s YouTube

Companies using Cursor's AI agent merge 39% more pull requests (PRs) than baseline groups, according to a new study by Suproteem Sarkar, an assistant professor at the University of Chicago. The research analyzed data from tens of thousands of Cursor users to measure real-world productivity effects. Critically, PR revert rates remained unchanged and bugfix rates decreased slightly, suggesting that code quality held steady despite the higher output.

The study also reveals a counterintuitive pattern: senior developers accept agent-generated code at significantly higher rates than junior developers. Each one-standard-deviation increase in years of experience is associated with an agent acceptance rate roughly 6% higher. This runs against the expectation that less experienced developers would lean more heavily on AI assistance, and suggests instead that using agents well requires skill in managing context, evaluating generated code, and defining clear requirements.

An analysis of 1,000 developers found that 61% of conversation-starting requests involve implementation tasks, in which the agent generates new code. The remaining requests split between explaining existing code or errors and planning actions before any code is written. Experienced developers are more likely to write a plan before generating code, which may help explain their better results with the agent. The study examined both how often developers make each kind of request and how often they accept the resulting code.

The University of Chicago research compared organizations that were already using Cursor before the agent's release with baseline groups that did not use Cursor during the analysis period. Beyond the 39% increase in merged PRs, the study found that the average number of lines edited and files touched per merged PR did not change significantly. This suggests developers are completing more tasks, rather than splitting the same work into more, smaller PRs or expanding the scope of individual ones, which points to a genuine throughput gain rather than inflated metrics.

This study arrives amid heated debate about AI's actual impact on software development productivity. A separate recent study by METR found that individual developers took 19% longer to complete tasks when using AI tools in a controlled setting, whereas the University of Chicago research finds the opposite at the organizational level. The divergence likely reflects different contexts: a controlled trial with developers new to the tools versus real-world workflows in which teams build proficiency over time.

The finding that experienced developers get more out of AI agents than juniors suggests a meaningful skill gap is emerging in the industry. As companies invest heavily in AI coding assistants, the research indicates that effectiveness depends not only on tool quality but also on developer training and organizational adaptation. Stable quality metrics alongside higher output also cut against concerns that AI assistants encourage rushed or careless code.